LOW RESOURCE AUDIO CODEC (LRAC) CHALLENGE

Official Rules

Last Revised: October 9, 2025 (revisions since July 21, 2025 are marked in purple)

Table of Contents

- SPONSOR

- ENTRY PERIOD

- PARTICIPANT ELIGIBILITY

- HOW TO ENTER

- ELIGIBLE ENTRY

- TECHNICAL AND PROCESSING REQUIREMENTS

- USE OF ENTRIES

- WINNER SELECTION AND NOTIFICATION

- ODDS

- GENERAL CONDITIONS AND RELEASE OF LIABILITY

- GOVERNING LAW

- PRIVACY

SPONSOR

These Official Rules (“Rules”) govern the operation of the Low Resource Audio Codec (LRAC) Challenge (the “Challenge”). This Challenge is sponsored by Cisco Systems, Inc., 170 West Tasman Drive, San Jose, CA 95134 USA (“Cisco,” or “we”). The purpose of this Challenge is to foster the exchange of diverse ideas across the research community and accelerate the development of practical telecommunication solutions—particularly for low-end devices—by driving innovation in low-resource neural audio coding focused on low compute, low latency, and low bitrate methods.

ENTRY PERIOD

The Challenge operates in distinct phases. The Challenge officially opens on August 1, 2025 and concludes with the announcement of results on October 14, 2025 (the “Challenge Period”). Entry into this Challenge constitutes acceptance of these Official Rules. The key dates for participation are:

- Registration Period: July 10, 2025 – September 9, 2025.

- Development Phase (Open Test Set Submissions): August 1, 2025 – September 30, 2025. Participants can submit processed outputs for objective evaluation and leaderboard updates during this period.

- Test Phase (Withheld/Blind Test Set Submissions): October 1–14, 2025

- Blind Test Set Release: September 30, 2025.

- Blind Test Submission Deadline: October 1, 2025. This is the submission for final evaluation.

- Result Announcement: October 14, 2025.

All deadlines fall at 11:59 pm Pacific Time on the dates indicated. Dates and times are subject to change.

PARTICIPANT ELIGIBILITY

This Challenge is open to participants (“Participants” or “you”) who are age 18 years or older at the time of entry, across academia and industry, as well as individual contributors. Participants who are employees or internally contracted vendors of governments and government-affiliated companies or organizations are not eligible for any monetary grants. Participants can enter as an individual, or as a team or group. Each team may consist of one or more individuals.

This Challenge is not open to:

- Employees or internally contracted vendors of Cisco or its parent/subsidiaries, agents and affiliates.

- The immediate family members or members of the same household of any such employee or vendor.

- Anyone professionally involved in the development or administration of this Challenge.

- Any employee whose employer’s guidelines or regulations do not allow entry in the Challenge.

This Challenge is not open to participants in the province of Quebec in Canada. In addition, residents of Cuba, Iran, Syria, North Korea, Myanmar (formerly Burma), Russia, the occupied regions of Ukraine, and Belarus are not eligible to participate. This Challenge is void in these countries and where otherwise prohibited or restricted by law.

HOW TO ENTER

To enter and participate in the Challenge, you will follow a multi-stage process:

1. Registration

To participate, your team must designate a delegate responsible for registration, correspondence, and submissions.

- Request: The team delegate must submit a registration request via the online form available at https://lrac.short.gy/registration-request-form. Detailed instructions are provided within the form itself. Registration requests may be submitted until September 9, 2025.

- Review: Your request will be reviewed by the organizers.

- Response: You will receive a response regarding your request status via the email you provided in the registration request form.

- Account Creation: You will receive instructions on how to create an account on the leaderboard website after your registration request is accepted by the organizers.

- Enrolment: Following creation of the leaderboard account, you will be able to submit an enrolment request for the challenge track(s) on the leaderboard website. Your challenge registration will be complete upon approval from the organizers.

- Information: Once registered, you will also be provided with the expected submission format, detailed submission guidelines, and a validation tool to help you validate your submissions.

2. Development Phase (Open Test Submissions)

During the Challenge runtime (August 1, 2025 – September 30, 2025), participants are encouraged to develop their speech coding models. During this period, you can process the open test set stimuli (a synthetic test set including reference clean signals – to be provided by the organizers) using the models you are developing and submit the processed audio outputs for evaluation using objective metrics only.

- Evaluation: Submissions during the development phase will be evaluated using intrusive and/or non-intrusive objective metrics on clean, and synthesized noisy and reverberant stimuli.

- Leaderboard: The results of the evaluations will be displayed on the leaderboard website. You will be permitted to make multiple submissions corresponding to revisions of your work and select which result to render in the leaderboard. All challenge submissions must be made through the leaderboard website.

Note: the results from objective metrics are for advisory purposes only and will not be used in final ranking or winner selection.

Details of metric selection will be provided on: https://lrac.short.gy/evaluation

3. Blind Test Phase (Final Submission)

This is the final evaluation phase.

- Blind Test Set Release: The blind test set (real-world test stimuli without reference signals) will be released on September 30, 2025.

- Submission Deadline: Your final Entry must be submitted by October 1, 2025.

- Entry Content: Your Entry shall include:

- The complete system audio outputs on the provided blind (withheld) test set for multiple bitrates as outlined in https://lrac.short.gy/evaluation.

- The submission must match the file and folder structure of the input set provided by the organizers as confirmed by the validation tool.

- A detailed system description paper (“Entry Paper”) of at least 2 pages plus references, describing your speech coding-only (Track 1) and/or joint coding-enhancement (Track 2) models and the methodology behind them, including:

- Training data description, potentially including curation, augmentation, and splits

- Model architecture, loss functions

- Hyperparameter tuning

- Any pre-trained models used

- Any training or finetuning stages

- Compute complexity, latency, number of parameters, and the actual bitrates used for each system submitted

- Evaluation process

- Checkpoint selection strategy (if applicable)

- Optional but encouraged: Public release of model checkpoints and/or source code for reproducibility

- The organizers will provide a sample paper for the baseline system.

- Teams with submissions for both tracks are permitted to submit a single system description paper covering solutions for both tracks or separate papers covering each solution.

- The content of the Entry Paper will further the Challenge purpose of advancing low-resource neural audio coding, specifically targeting low compute, low latency, and low bitrate methods, by promoting exchange of ideas and accelerating innovation for development of practical telecommunication solutions, including for low-end devices.
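The requirement above that a submission match the file and folder structure of the input set can be sanity-checked locally before upload. The following is a minimal sketch, not the organizers' validation tool: the function names are hypothetical, and it assumes that each output file keeps the relative path and file name of its input file.

```python
from pathlib import Path


def relative_files(root):
    """Return the set of file paths under root, relative to root."""
    root = Path(root)
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def compare_structure(input_root, output_root):
    """Report files missing from, or unexpected in, the output tree.

    Assumption: output files reuse the relative paths of the input files.
    """
    expected = relative_files(input_root)
    produced = relative_files(output_root)
    return {
        "missing": sorted(expected - produced),
        "unexpected": sorted(produced - expected),
    }
```

An empty `missing` list and an empty `unexpected` list only indicate that the two trees line up; the validation tool provided by the organizers remains the authoritative check.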

The blind test phase submissions will be evaluated using crowdsourced listening tests to determine overall ranking for each track and to select winner(s) for each track, as detailed under WINNER SELECTION AND NOTIFICATION.

Cisco is not responsible for late, lost, delayed, damaged, misdirected, incomplete, void, corrupted, and/or unintelligible entries, or for any problems, bugs or malfunctions Participants may encounter when submitting their Entry. Only complete, valid, intelligible entries will be accepted. Participants must provide all information requested to be eligible to win. Cisco reserves the right to disqualify false entries or entries suspected of being false.

Once submitted, an Entry cannot be deleted or cancelled.

ELIGIBLE ENTRY

To be eligible, an Entry must meet the following content/technical requirements:

- The audio files you submit must result from the speech coding model(s) described in your Entry Paper

- Your Entry must be your own original work

- Your Entry cannot have been selected as a winner in any other contest

- Noise robustness front (or back) ends are excepted, while noting that systems employing those are still subject to the requirements outlined under: TECHNICAL AND PROCESSING REQUIREMENTS

- You must have obtained all consents, approvals, or licenses required for you to submit your Entry

- To the extent that Entry requires the submission of user-generated content such as software, photos, videos, music, artwork, essays, etc., entrants warrant that their Entry is their original work, has not been copied from others without permission or apparent rights, and does not violate the privacy, intellectual property rights, or other rights of any other person or entity

- Your Entry may NOT contain, as determined by Cisco in our sole and absolute discretion, any content that is obscene or offensive, violent, defamatory, disparaging or illegal, or that promotes alcohol, illegal drugs, tobacco or a particular political agenda, or that communicates messages that may reflect negatively on the goodwill of Cisco

- Your Entry must NOT include clips processed using speech coding, enhancement or other methods that are not part of your Entry described in your Entry Paper and your submission to this Challenge

TECHNICAL AND PROCESSING REQUIREMENTS

Participants must submit systems that meet the following technical requirements:

- Sampling rate and number of channels: Systems must support mono (single) audio input and output at 24 kHz sampling rate.

- Bitrate: Only constant-bitrate systems are permitted. No variable or adaptive bitrate coding or entropy coding optimization is allowed. A single system must support both of the following modes:

  - Ultralow bitrate mode: budget up to 1 kbps

  - Low bitrate mode: budget up to 6 kbps

  The same decoder must be capable of supporting both modes and a mixture of the modes within a single inference run.

- Latency: The total latency must be equal to or lower than:

  - Track 1 – transparency codecs: 30 ms

  - Track 2 – speech enhancement codecs: 50 ms

  The total latency is defined as: total latency = algorithmic latency + buffering latency, where algorithmic latency is the delay introduced by the processing algorithms and their internal operations, excluding buffering, and buffering latency is the delay resulting from processing audio in fixed-size blocks or frames.

- Compute complexity:

  - Track 1 – transparency codecs: Total compute ≤ 700 MFLOPS; Receive-side compute ≤ 300 MFLOPS.

  - Track 2 – speech enhancement codecs: Total compute ≤ 2600 MFLOPS; Receive-side compute ≤ 600 MFLOPS.
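The bitrate, latency, and compute limits above can be combined into a simple self-check. The sketch below is illustrative only: `SystemSpec` and `check_constraints` are hypothetical names, the example figures are invented, and treating 1 kbps as 1000 bit/s is an assumption. How the organizers measure latency and compute is defined by the challenge materials, not by this code.

```python
from dataclasses import dataclass

# Limits copied from the requirements above. Treating 1 kbps as 1000 bit/s
# is an assumption of this sketch.
BITRATE_CAP_BPS = {"ULBR": 1000.0, "LBR": 6000.0}
TRACK_LIMITS = {
    1: {"latency_ms": 30.0, "total_mflops": 700.0, "receive_mflops": 300.0},
    2: {"latency_ms": 50.0, "total_mflops": 2600.0, "receive_mflops": 600.0},
}


@dataclass
class SystemSpec:
    """Hypothetical description of a submitted system (not challenge tooling)."""
    track: int
    frames_per_second: float
    bits_per_frame: dict  # mode name ("ULBR"/"LBR") -> constant bits per frame
    algorithmic_latency_ms: float
    buffering_latency_ms: float
    total_mflops: float
    receive_mflops: float


def check_constraints(spec):
    """Return a list of violated requirements (empty when all checks pass)."""
    limits = TRACK_LIMITS[spec.track]
    problems = []

    # A constant-bitrate codec spends the same number of bits on every frame.
    for mode, cap in BITRATE_CAP_BPS.items():
        if mode not in spec.bits_per_frame:
            problems.append(f"{mode}: mode not supported")
            continue
        bitrate = spec.bits_per_frame[mode] * spec.frames_per_second
        if bitrate > cap:
            problems.append(f"{mode}: {bitrate:.0f} bit/s exceeds {cap:.0f} bit/s")

    # total latency = algorithmic latency + buffering latency
    latency = spec.algorithmic_latency_ms + spec.buffering_latency_ms
    if latency > limits["latency_ms"]:
        problems.append(f"latency: {latency:.1f} ms exceeds {limits['latency_ms']:.1f} ms")

    if spec.total_mflops > limits["total_mflops"]:
        problems.append("compute: total exceeds limit")
    if spec.receive_mflops > limits["receive_mflops"]:
        problems.append("compute: receive-side exceeds limit")
    return problems


# Invented example: 50 frames/s with 20 or 120 bits per frame.
spec = SystemSpec(track=1, frames_per_second=50.0,
                  bits_per_frame={"ULBR": 20, "LBR": 120},
                  algorithmic_latency_ms=10.0, buffering_latency_ms=20.0,
                  total_mflops=650.0, receive_mflops=280.0)
print(check_constraints(spec))  # prints []
```

In this invented example the system sits exactly on the 1 kbps, 6 kbps, and 30 ms limits, which the requirements allow ("up to" and "equal to or lower than").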

Training and Data Usage: Training, validation, and hyperparameter tuning must be performed strictly on the designated challenge speech and noise datasets listed on the challenge website: https://lrac.short.gy/datasets.

- Use of publicly available pre-trained models (e.g., HuBERT, Wav2Vec) is allowed only if they were publicly available before the challenge start date. These models may be fine-tuned only on the allowed challenge datasets.

- Prohibited:

- Using any other datasets for training, fine-tuning, or hyperparameter tuning.

- Using open or blind test data for any purpose other than evaluation; that is, the open and blind test data must not be used, for example, for training or fine-tuning of the models.

- Techniques like domain adaptation, self-training, or test-time adaptation involving the dev/test data.

- To ensure fair evaluation, models with long past-context receptive fields must not have access to the entire test utterance or repeated versions of it within their receptive window. Techniques such as concatenating multiple copies of the test utterance, reflective/mirrored padding, or any method that artificially repeats or extends the test input are strictly prohibited. Instead, models should employ neutral padding strategies, such as zero padding, when necessary.
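As an illustration of the neutral padding described above, the following sketch feeds a model frame by frame and fills any past context that precedes the start of the utterance with zeros. The function name and the frame and context sizes are hypothetical; real systems will differ in how they buffer audio.

```python
def frames_with_zero_history(samples, frame_len, context_len):
    """Yield (context, frame) pairs for frame-by-frame processing.

    The context holds the context_len samples preceding each frame. Positions
    before the start of the utterance are zeros, never mirrored or repeated
    copies of the test input.
    """
    padded = [0.0] * context_len + list(samples)
    for start in range(0, len(samples), frame_len):
        context = padded[start:start + context_len]
        frame = padded[start + context_len:start + context_len + frame_len]
        yield context, frame


for context, frame in frames_with_zero_history([1.0, 2.0, 3.0, 4.0], 2, 3):
    print(context, frame)
# [0.0, 0.0, 0.0] [1.0, 2.0]
# [0.0, 1.0, 2.0] [3.0, 4.0]
```

If the utterance length is not a multiple of `frame_len`, the last frame in this sketch is simply shorter; a real system would typically zero-pad it as well.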

Processing Rules:

- Test utterances can be processed via a single-pass or iterative approaches. If the system uses iterative processing (e.g., either with multiple passes through the same network or using multi-step models such as diffusion models), the compute complexity and latency totals of the system must be the respective sums of the complexities and latencies of all the individual iterations and steps, and must be within the constraints of the challenge outlined under TECHNICAL AND PROCESSING REQUIREMENTS.

- Any system architecture is allowed (e.g., traditional, neural, hybrid) as long as all challenge rules are met.

- Systems may be: (i) end-to-end enhancement speech codecs, (ii) traditional codecs with pre-/post-processing, (iii) any other valid configuration.
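For iterative systems, the summation rule above can be sketched as follows. The per-pass figures are invented and the function names are hypothetical; only the total compute and latency limits for Track 1 are checked here, and the receive-side compute limit would need a separate check of the same form.

```python
def iterative_totals(steps):
    """Sum compute and latency over all iterations.

    steps: list of (mflops, latency_ms) tuples, one per pass or model step.
    """
    total_mflops = sum(mflops for mflops, _ in steps)
    total_latency_ms = sum(latency for _, latency in steps)
    return total_mflops, total_latency_ms


def within_budget(steps, max_mflops, max_latency_ms):
    """True if the summed totals respect both limits."""
    total_mflops, total_latency_ms = iterative_totals(steps)
    return total_mflops <= max_mflops and total_latency_ms <= max_latency_ms


# Hypothetical four-pass system: 150 MFLOPS and 6 ms per pass.
passes = [(150, 6)] * 4
print(iterative_totals(passes))                     # (600, 24)
print(within_budget(passes, 700, 30))               # True
print(within_budget(passes + [(150, 6)], 700, 30))  # False: 750 MFLOPS in total
```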

USE OF ENTRIES

Cisco is not claiming ownership rights to your Entry. However, by submitting an Entry, you automatically grant Cisco an irrevocable, royalty-free, worldwide, transferable and sublicensable license to use, copy, review, assess, test and otherwise analyse your Entry and all its content in connection with this contest and use, copy, make, sell, modify and distribute your Entry in any media whatsoever now known or later invented for any non-commercial or commercial purpose, including, but not limited to, the development, marketing and sale of Cisco products or services, without further permission from you. For the avoidance of doubt, use of your Entry in any media whatsoever includes use at the 2026 Low-Resource Audio Codec (LRAC) Workshop, an ICASSP 2026 Satellite Workshop, on May 4, 2026, and in workshop associated materials, including the workshop website. You will not receive any compensation or credit for use of your Entry, other than what is described in these Official Rules. By entering you acknowledge that Cisco may have developed or commissioned materials similar or identical to your Entry and you waive any claims resulting from any similarities to your Entry. Further you understand that Cisco will not restrict work assignments of representatives who have had access to your Entry, and you agree that use of information in Cisco representatives’ unaided memories in the development or deployment of our products or services does not create liability for us under this agreement or copyright or trade secret law. Cisco retains the right to use, review, assess, and analyze all submitted data, including audio files, for any purpose, at any time, without requiring additional permissions. Cisco does not claim ownership of your models but reserves the right to use submitted test data and results for evaluation, publication, and analysis purposes.

Your Entry may be posted on a public website. Cisco is not responsible for any unauthorized use of your Entry by visitors to a public website. Cisco may use the Challenge (including any submission) for publicity, advertising or other marketing purposes, in any media, and may use the name, likeness, and hometown name of Participants as part of that publicity, without additional compensation to the Participants. Cisco is not obligated to use your Entry for any purpose, even if it has been selected as a winning Entry.

WINNER SELECTION AND NOTIFICATION

Entries will be ranked based on the outcomes of crowdsourced listening tests performed on blind test set stimuli processed by participants’ systems. (For details on the evaluation process, see the crowdsourced evaluation battery table at: https://lrac.short.gy/evaluation.)

The evaluation will focus on the following criteria:

- Track 1 – coding-only (transparency) codecs:

  - Quality on clean speech

  - Resilience toward light noise and mild reverb

  - Preservation of simultaneous talkers

  - Speech intelligibility in clean

- Track 2 – speech enhancement capable codecs:

  - Quality on clean speech

  - Resilience toward light noise and mild reverb

  - Denoising performance

  - Speech intelligibility in clean

  - Speech intelligibility in noise

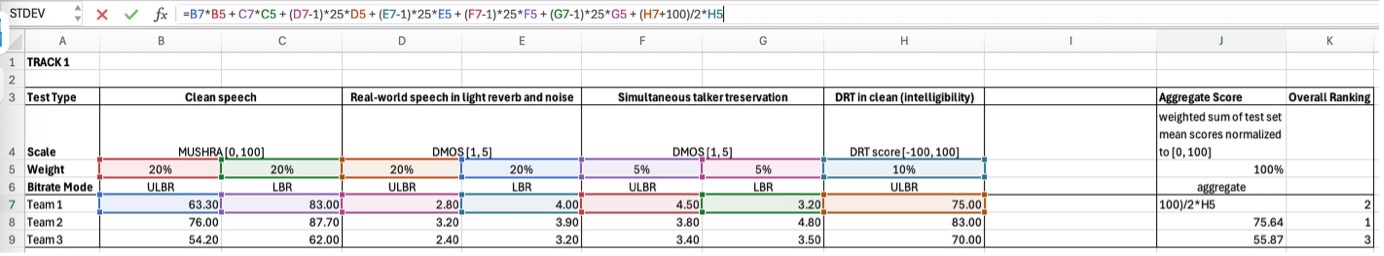

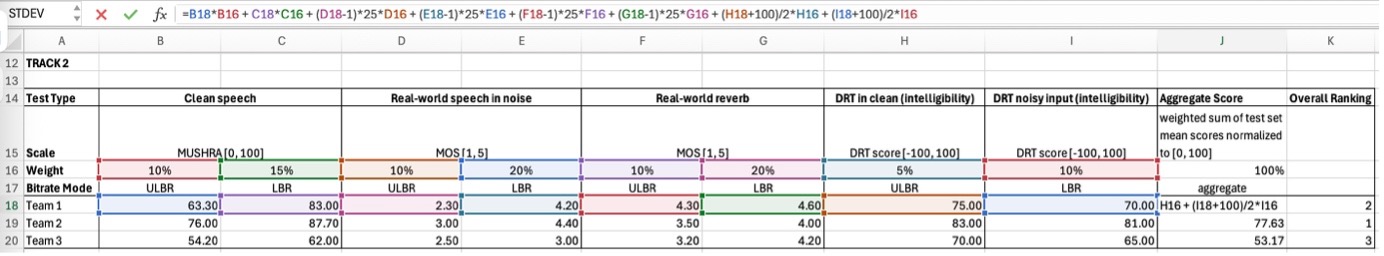

For each track, entries will be ranked based on the weighted results of individual crowdsourced assessments across multiple bitrates. The aggregate score for each entry in a given track will be calculated as a weighted sum:

$$\text{aggregate score} = \sum_{i=1}^{N} w_i \, r_i$$

where $w_i$ is the weight associated with the $i$-th assessment out of $N$ assessments (with $N=7$ for Track 1 and $N=8$ for Track 2) and $r_i$ is the result of the $i$-th assessment normalized to the range $[0, 100]$ as specified below.

- The raw scores in the crowdsourced evaluation battery tests have the following ranges:

- RAW_MUSHRA range: [0, 100]

- RAW_DMOS range: [1, 5]

- RAW_MOS range: [1, 5]

- RAW_DRT range: [-100, 100]

- For aggregate score calculation, the scores for each test are first normalized to a common range of [0, 100], as follows:

- MUSHRA = RAW_MUSHRA * 1: [0, 100]

- DMOS = (RAW_DMOS - 1) * 25: [0, 100]

- MOS = (RAW_MOS - 1) * 25: [0, 100]

- DRT = (RAW_DRT + 100) / 2: [0, 100]

- The following test sets are considered:

- TR: Transparency

- RW: Real-world

- ST: Simultaneous Talker

- RWN: Real-world noise

- RWR: Real-world reverb

- DRTC: Diagnostic Rhyme Test in clean

- DRTN: Diagnostic Rhyme Test with input noise

- The following bitrate modes are considered:

- ULBR: Ultra-low bitrate (budget up to 1kbps)

- LBR: Low bitrate (budget up to 6kbps)

Given the above, the aggregate scores are calculated as follows:

- Track 1: Aggregate score calculation

- Track 2: Aggregate score calculation
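The normalization rules and the weighted sum described above can be sketched in a few lines. This is an illustration only: the function names are hypothetical, and the weights and raw scores in the example are placeholders, not the weights used by the challenge (those are specified in the evaluation battery at https://lrac.short.gy/evaluation).

```python
def normalize(test_type, raw):
    """Map a raw listening-test score to the common [0, 100] range."""
    if test_type == "MUSHRA":        # raw range [0, 100]
        return raw * 1.0
    if test_type in ("DMOS", "MOS"):  # raw range [1, 5]
        return (raw - 1.0) * 25.0
    if test_type == "DRT":           # raw range [-100, 100]
        return (raw + 100.0) / 2.0
    raise ValueError(f"unknown test type: {test_type}")


def aggregate_score(assessments, weights):
    """Weighted sum of normalized results.

    assessments: list of (test_type, raw_score), one per assessment.
    weights: list of w_i in the same order (placeholder values here).
    """
    if len(assessments) != len(weights):
        raise ValueError("one weight is required per assessment")
    return sum(w * normalize(t, r) for w, (t, r) in zip(weights, assessments))


# Placeholder example with two assessments and equal weights.
print(aggregate_score([("MUSHRA", 80.0), ("MOS", 3.0)], [0.5, 0.5]))  # 65.0
```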

Entries will be ranked according to their aggregate scores, with the highest aggregate score receiving the top rank, followed by the next highest, and so on. Additional details are available on the crowdsourced evaluation battery page: https://lrac.short.gy/evaluation. The winning team in each track will be the one whose entry achieves the highest rank.

In the event of a tie—where multiple entries achieve the same aggregate score (rounded down to two decimal places) and therefore share the same rank—the tie will be resolved by the judges using the following criteria:

- Lower compute complexity will receive a higher rank.

- If still tied, lower latency will receive a higher rank.
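The ranking and tie-breaking order above can be expressed as a single sort key. The sketch below uses invented entries and a hypothetical function name. It assumes that "compute complexity" in the tie-break refers to a single figure per entry (for example total compute), which these Rules do not specify.

```python
from decimal import Decimal, ROUND_FLOOR


def rank_entries(entries):
    """Return team names ordered from top rank to bottom rank.

    entries: list of dicts with keys 'team', 'score', 'mflops', 'latency_ms'.
    Scores are rounded down to two decimal places before comparison; ties are
    broken by lower compute, then by lower latency.
    """
    def key(entry):
        score = Decimal(str(entry["score"])).quantize(
            Decimal("0.01"), rounding=ROUND_FLOOR
        )
        return (-score, entry["mflops"], entry["latency_ms"])

    return [entry["team"] for entry in sorted(entries, key=key)]


# Invented entries: A, B, and D all round down to 71.23.
entries = [
    {"team": "A", "score": 71.239, "mflops": 600, "latency_ms": 25},
    {"team": "B", "score": 71.234, "mflops": 500, "latency_ms": 28},
    {"team": "C", "score": 80.0, "mflops": 700, "latency_ms": 30},
    {"team": "D", "score": 71.231, "mflops": 500, "latency_ms": 20},
]
print(rank_entries(entries))  # ['C', 'D', 'B', 'A']
```

`Decimal` is used instead of floating-point arithmetic so that a score such as 71.23 is not pushed below its second decimal place by binary rounding.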

Pending eligibility verification, potential winners will be selected by Cisco, Cisco’s agent, or a qualified judging panel from among all eligible entries, based solely on the rankings determined by the aggregate scores from the crowdsourced listening test results as described above.

All decisions regarding winner determination and ranking made by the judges are final and binding.

Winners will be selected and notified by October 14, 2025. The challenge results—including individual scores, aggregate scores, overall rankings, and winners for each track—will be announced on October 14, 2025, and posted on the leaderboard website.

ODDS

The odds of winning depend on the total number and quality of eligible entries received.

GENERAL CONDITIONS AND RELEASE OF LIABILITY

To the extent allowed by law, by entering you agree to release and hold harmless Cisco and its respective parents, partners, subsidiaries, affiliates, employees, and agents from all liability for any injury, loss, or damage of any kind arising in connection with this Challenge. Without limiting the foregoing, everything on this site and in connection with this Challenge is provided “as is” without warranty of any kind, either express or implied, including but not limited to, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement.

The decisions of Cisco are final and binding. We reserve the right to cancel, change, or suspend this Challenge for any reason, including cheating, technology failure, catastrophe, war, or any other unforeseen or unexpected event that affects the integrity of this Challenge, whether human or mechanical. If the integrity of the Challenge cannot be restored, we may select winners from among all eligible entries received before we had to cancel, change or suspend the Challenge. If you attempt to compromise, or we have strong reason to believe that you have compromised, the integrity or the legitimate operation of this Challenge by cheating, hacking, creating a bot or other automated program or device, or by committing fraud in any way, we may seek damages from you to the full extent of the law and you may be banned from participation in future Cisco promotions.

The Challenge is subject to applicable federal, state and local laws, and these Official Rules. All Participants are solely responsible for compliance with any applicable laws, rules and regulations, contractual limitations and/or office or company policies, if any, regarding Participant’s participation in trade promotions.

In the event of a dispute as to the source of any Entry, the authorized account holder of the email address used to enter will be deemed to be the person making the Entry. The authorized “account holder” is the natural person assigned an email address by an Internet access provider, online service provider or other organization responsible for assigning email addresses for the domain associated with the submitted address. Cisco is not responsible for:

- Lost, late, misdirected, undeliverable, incomplete or indecipherable entries due to system errors or failures, or faulty transmissions or other telecommunications malfunctions and/or entries.

- Technical failures of any kind.

- Failures of any of the equipment or programming associated with or utilized in the Challenge.

- Unauthorized human and/or mechanical intervention in any part of the submission process or the Challenge administration.

- Technical or human error which may occur in the administration of the Challenge or the processing of entries.

- Other factors beyond Cisco’s reasonable control.

GOVERNING LAW

This Challenge will be governed by the laws of the State of California, and you consent to the exclusive jurisdiction and venue of the courts of the State of California for any disputes arising out of this Challenge.

PRIVACY

All personal information collected by Sponsor will be used for the administration of the Challenge and in accordance with Sponsor’s privacy policy. Any questions regarding privacy matters should be directed to the address set out above. Please refer to Sponsor’s privacy statement located at https://www.cisco.com/c/en/us/about/legal/privacy-full.html for important information regarding the collection, use and disclosure of personal information by Sponsor.

* * *